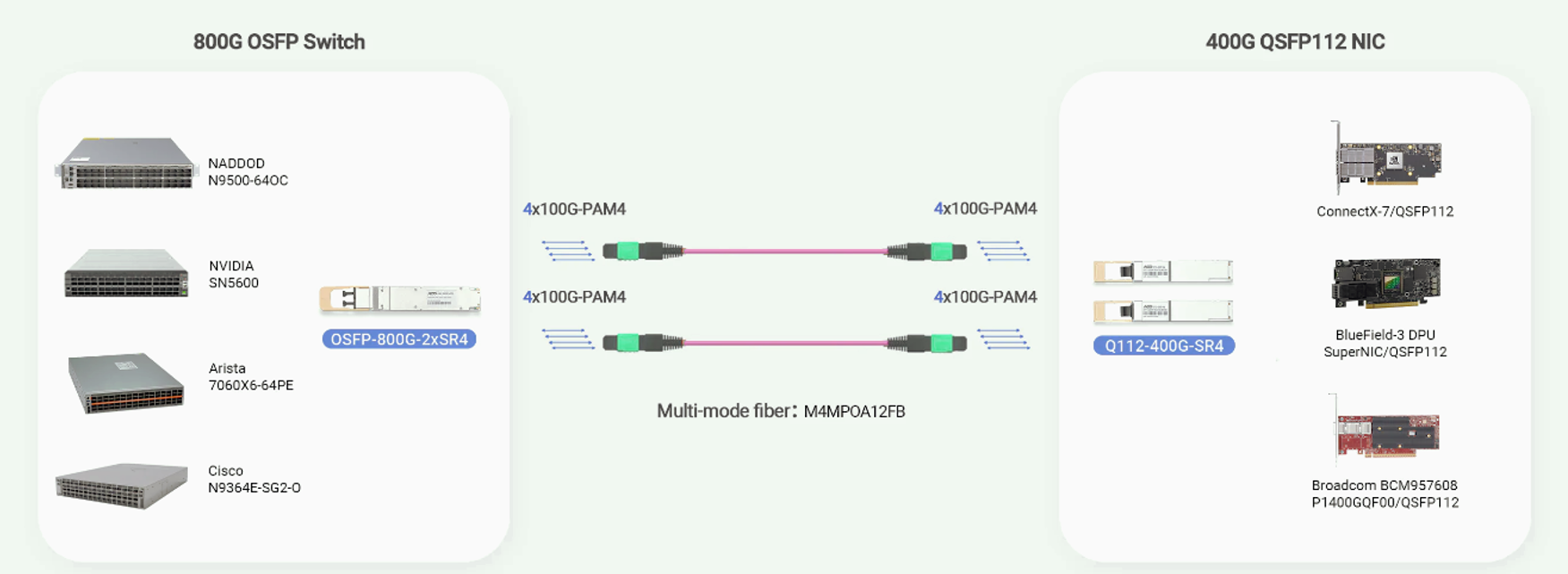

🚀 800G Switch to 400G NIC Interconnection Solution: Leveraging NVIDIA SN5600 and 4x100G Optics

As data center infrastructure continues to evolve toward higher bandwidth and AI-centric workloads, achieving efficient interconnection between next-generation switches and servers becomes critical. A common architecture today involves connecting 800G switches to 400G NICs (Network Interface Cards), ensuring optimized performance, scalability, and cost-efficiency.

This article explores a practical deployment model using NVIDIA SN5600 switches, 4x100G PSM4 optics, and Q112-400G-SR4 transceivers.

🛠️ System Components Overview

1. NVIDIA SN5600 800G Ethernet Switch

- Delivers low-latency, high-throughput Ethernet networking on NVIDIA's Spectrum-4 platform
- Offers 800Gbps per port through OSFP 800G interfaces
- Designed to support scalable AI training clusters, high-performance computing (HPC), and dense enterprise data centers
- Well suited to Spine-Leaf architectures with dense East-West traffic

2. 400G NIC with Q112-400G-SR4 Transceiver

- A high-speed 400G network adapter with a QSFP112 port, populated with a Q112-400G-SR4 transceiver
- Typically installed in GPU servers, such as NVIDIA H100/H200 platforms
- Handles high-speed uplinks from compute to the network with minimal latency

3. Optical Modules: 4x100G PSM4

- An 800G port does not have to run as a single 800G link: its eight 100G lanes can be split into 2×400G or 4×200G logical ports
- In this solution, we use 4x100G PSM4 modules (400G aggregate each), enabling breakout to multiple NICs
- Each module carries 100G per lane over parallel single-mode fiber, with reach up to 500 meters (typical for intra-datacenter links)
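The lane arithmetic behind the breakout options above can be sketched in a few lines of Python. This is an illustration only: it assumes an 800G OSFP port exposes eight electrical lanes at 100G each, and the names `BreakoutMode` and `breakout_modes` are hypothetical helpers, not part of any NVIDIA tool. Which of the listed splits a given switch and transceiver actually support depends on the hardware and software release.

```python
from __future__ import annotations

from dataclasses import dataclass

LANE_GBPS = 100      # assumption: 100G per electrical lane
LANES_PER_PORT = 8   # assumption: 8 lanes per 800G OSFP port


@dataclass(frozen=True)
class BreakoutMode:
    """One way of dividing a physical port into equal logical ports."""
    logical_ports: int
    lanes_per_logical_port: int

    @property
    def gbps_per_logical_port(self) -> int:
        return self.lanes_per_logical_port * LANE_GBPS


def breakout_modes(lanes: int = LANES_PER_PORT) -> list[BreakoutMode]:
    """Enumerate even power-of-two splits of the lanes (1, 2, 4, ... ports)."""
    modes = []
    n = 1
    while n <= lanes:
        modes.append(BreakoutMode(n, lanes // n))
        n *= 2
    return modes


for mode in breakout_modes():
    # Arithmetic only; real hardware may not offer every split.
    print(f"{mode.logical_ports} x {mode.gbps_per_logical_port}G")
```

The 2 x 400G entry is the mode this article relies on: each logical port gets four 100G lanes, matching a 400G NIC.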

🔗 How the 800G-to-400G Interconnection Works

- The SN5600’s 800G OSFP port is configured in 2x400G breakout mode.
- Each 800G port then connects to two 400G NICs installed in two different servers.
- The optical layer uses 4x100G PSM4 modules on the switch side and Q112-400G-SR4 modules on the server NIC side. Because PSM4 is a single-mode optic and SR4 is a multimode one, confirm with the module vendor that the chosen pair interoperates over the planned fiber before deployment.
- Fiber cabling uses MPO-12 to MPO-12 connections to maintain high-density deployment.
- This topology uses switch ports efficiently while preparing the infrastructure for future upgrades to native 800G end-to-end.
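To make the two-servers-per-port mapping concrete, here is a minimal planning sketch in Python. It assumes every 800G port runs in 2x400G breakout and assigns servers to logical ports in order. The function `plan_links`, the hostnames, and the `swpNsM` port labels are hypothetical and chosen for illustration; actual interface names depend on the switch operating system.

```python
from __future__ import annotations

from typing import NamedTuple


class Link(NamedTuple):
    switch_port: str   # physical 800G OSFP port (illustrative label)
    logical_port: str  # 400G breakout port (illustrative label)
    server: str        # hypothetical server hostname


def plan_links(num_switch_ports: int, servers: list[str]) -> list[Link]:
    """Assign two servers to each 800G port, assuming 2x400G breakout."""
    ports_needed = (len(servers) + 1) // 2  # ceiling of len / 2
    if ports_needed > num_switch_ports:
        raise ValueError(
            f"{len(servers)} servers need {ports_needed} ports, "
            f"only {num_switch_ports} available"
        )
    links = []
    for i, server in enumerate(servers):
        port = i // 2 + 1  # two servers share one physical port
        sub = i % 2        # 0 or 1: which 400G half of the port
        links.append(Link(f"swp{port}", f"swp{port}s{sub}", server))
    return links


for link in plan_links(2, ["gpu-node-a", "gpu-node-b", "gpu-node-c"]):
    print(link.switch_port, link.logical_port, link.server)
```

A plan like this is mainly useful as a cabling worksheet: each row corresponds to one MPO trunk between a switch-side module and a server NIC.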

🌐 Advantages of This Deployment Strategy

| Feature | Benefit |
|---|---|
| Scalability | Simplifies expansion toward full 800G or 1600G future networks |
| Cost Efficiency | Reduces immediate hardware investment by utilizing 400G NICs |
| Performance | Supports ultra-low latency and high throughput (critical for AI workloads) |
| Flexibility | Allows mixed environments of 400G and 800G devices without major redesign |

📈 Typical Application Scenarios

- AI Hyperscale Training Clusters (e.g., LLMs, GenAI)
- Cloud Datacenter Upgrades (AWS, Azure, GCP equivalents)
- High-Performance Computing (HPC) Facilities
- Financial Trading Platforms (low-latency network fabrics)

📝 Conclusion

The combination of NVIDIA SN5600, Q112-400G-SR4, and 4x100G PSM4 optical modules provides a highly flexible and forward-compatible solution to bridge current 400G server deployments with 800G spine networks.

For operators building AI supercomputing clusters or upgrading hyperscale data centers, adopting this hybrid interconnect architecture ensures both cost efficiency today and readiness for future 800G/1600G environments.

Investing in a smart 800G-to-400G solution isn’t just about faster speeds; it’s about future-proofing your AI and cloud infrastructure.